Marketers are deploying AI tools across creative, targeting, bidding and reporting. AI is advancing fast, and promises of efficiency are everywhere. One truth cuts through the hype: AI means nothing without results. What matters isn’t having it in your stack but proving that it drives measurable performance.

More content or faster workflows aren’t enough. To justify AI investment, marketers have to show whether campaigns convert better, lead quality improves, brand metrics lift or return on ad spend (ROAS) increases, and then prove that AI was directly responsible.

Define the right performance question

Before measuring, clarify what AI is expected to affect. Start with specific, outcome-based questions:

- Will AI-generated product descriptions increase mobile conversion rates compared with our current copy?

- Does AI-driven bidding deliver a lower cost per acquisition on our core audiences than manual bidding did last quarter?

- Can AI-powered personalization drive higher repeat purchase rates than static emails?

Having a measurable hypothesis sets the stage for honest evaluation. It also keeps teams from confusing activity with impact.
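One way to make such a hypothesis concrete is to write it down as data before the test starts. The sketch below assumes a hypothetical `Hypothesis` record holding the metric, its pre-AI baseline, whether higher values are better (false for cost metrics such as CPA) and a minimum lift agreed on in advance. It only checks the size of the change against that threshold; whether the change is statistically reliable is a separate question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """A measurable performance question, e.g. 'AI bidding lowers CPA'.

    Hypothetical structure for illustration, not a standard schema.
    """
    metric: str               # e.g. "cpa" or "mobile_conversion_rate"
    baseline: float           # value measured before AI was introduced
    higher_is_better: bool    # False for cost metrics such as CPA
    min_relative_lift: float  # smallest improvement worth acting on, e.g. 0.05 = 5%

    def relative_lift(self, observed: float) -> float:
        """Change versus baseline, signed so that positive always means 'better'."""
        change = (observed - self.baseline) / self.baseline
        return change if self.higher_is_better else -change

    def is_supported(self, observed: float) -> bool:
        """True if the observed value clears the threshold set before the test."""
        return self.relative_lift(observed) >= self.min_relative_lift
```

For the bidding question above, with a hypothetical baseline CPA of $40, an observed CPA of $36 is a 10% improvement and clears a 5% threshold, so `Hypothesis("cpa", 40.0, False, 0.05).is_supported(36.0)` returns `True`.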

Set baselines and use structured comparison

Measurement starts with knowing where you started. Record baseline metrics such as conversion rates, cost per lead, customer lifetime value (CLV) or campaign launch times before AI is introduced. Then, as you bring AI into the mix, set up direct comparisons:

- Run AI-driven creative alongside human creative, keeping everything else equal.

- Test new AI-powered targeting on a subset of your audience while the rest stays on legacy approaches.

However, since “everything else equal” is rarely realistic in digital marketing, expect contamination and plan for it. Auction and pacing algorithms can shift bid pressure, delivery and inventory allocation in ways that affect both test and control groups across platforms. Walled gardens are one example: AI bidding can ripple through their auctions and contaminate holdouts.

Account for it and contain it. For example:

- Log any contamination risks and observations, such as CPM swings or pacing spikes.

- Split your audience fairly, either randomly or by geography, and minimize any crossover.

- Keep budgets, dates and pacing rules the same across test and control.

- Run the test more than once, at different times.
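A random split that stays stable for the life of a test can be sketched with a salted hash, a common technique for experiment assignment. This is a minimal illustration under that assumption, not any ad platform’s method: `assign_group` and its parameters are hypothetical, and platform-side delivery can still blur the boundary between groups.

```python
import hashlib


def assign_group(user_id: str, experiment: str, test_share: float = 0.5) -> str:
    """Deterministically assign a unit to 'test' or 'control' (hypothetical helper).

    Hashing the unit ID together with the experiment name gives an effectively
    random split: the same unit always lands in the same group (limiting
    crossover), while different experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "test" if bucket < test_share else "control"
```

For a geographic split, pass a region code rather than a user ID as `user_id`, so that whole regions are assigned together and individuals cannot drift between groups.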

Compare pre- and post-AI results, or set up head-to-head campaigns that account for these variables. Then you will be able to attribute differences to AI with much greater confidence.

Choose KPIs that reflect real AI impact

KPIs should match the role AI plays in your business and emphasize outcomes that matter:

- Incremental revenue or sales attributed to AI use.

- Cost savings or efficiency gains tied to automation or AI-driven optimization.

- Quality improvements, such as uplift in customer retention, brand engagement or NPS, where AI is a direct input.

Use these alongside operational metrics and always compare against your original baseline or an appropriate control group. Otherwise, it becomes impossible to tell whether AI is driving results or just adding noise.
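Incremental revenue and incremental ROAS can be estimated directly from a test/control split. The sketch assumes a randomized split with control revenue scaled to the test group’s size; `incremental_metrics` is a hypothetical helper, and `ai_cost` is an assumed input standing for whatever spend the AI treatment adds (tool fees, extra media or both), which each team has to define for itself.

```python
def incremental_metrics(test_revenue: float, test_size: int,
                        control_revenue: float, control_size: int,
                        ai_cost: float) -> dict:
    """Incremental revenue and incremental ROAS from a test/control split."""
    # Scale control revenue to the test group's size: the revenue the test
    # group would be expected to produce without AI.
    expected_without_ai = control_revenue * (test_size / control_size)
    incremental_revenue = test_revenue - expected_without_ai
    return {
        "incremental_revenue": incremental_revenue,
        # Undefined (NaN) when no AI cost was recorded.
        "incremental_roas": incremental_revenue / ai_cost if ai_cost else float("nan"),
    }
```

With 50,000 users in test generating $120,000 and 25,000 in control generating $50,000, the control implies $100,000 without AI, so incremental revenue is $20,000; against $10,000 of AI cost, incremental ROAS is 2.0.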

Validate and prove causality, and test more than once

Validation in AI measurement means isolating the incremental impact AI has on outcomes and confirming that the improvement did not happen by chance or because of external factors.

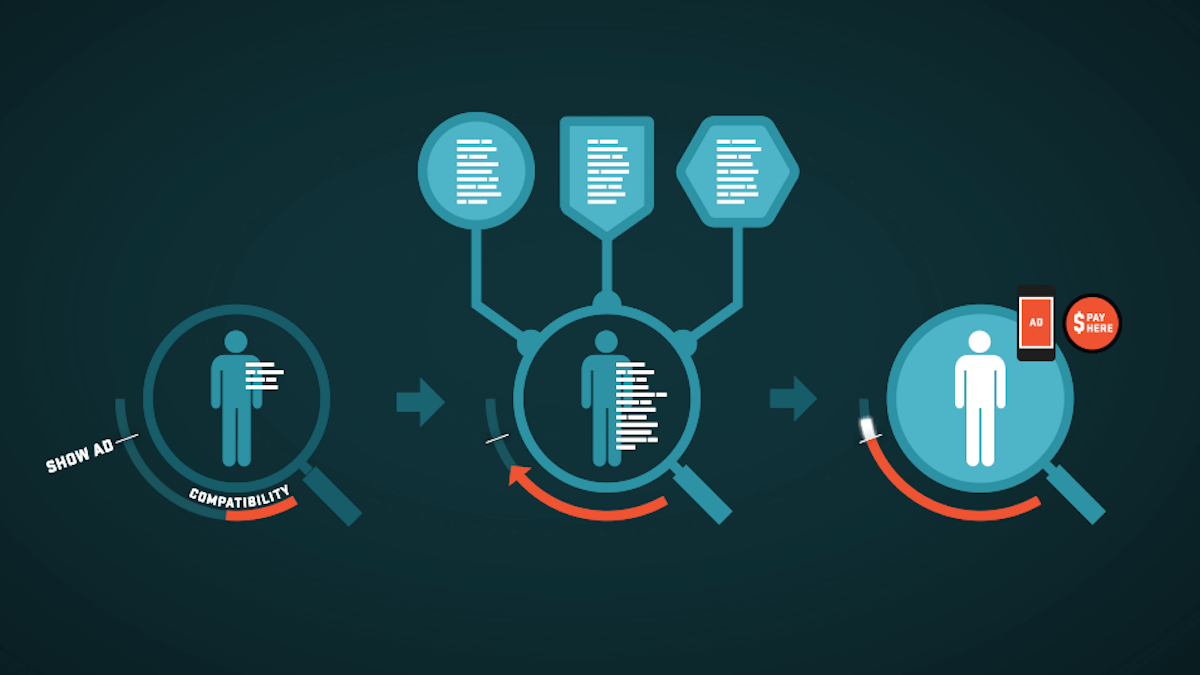

Incrementality testing is a robust approach: roll out an AI-powered feature, such as personalization or bid optimization, to only a random portion of your audience. Keep everything else the same. If the audience exposed to AI shows statistically significant improvements in outcomes compared with the audience that is not, you have evidence of causality.
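For conversion-rate outcomes, a standard check for statistical significance is the two-proportion z-test. The sketch below uses only the Python standard library and relies on the normal approximation, so it assumes reasonably large groups; the function name and example counts are illustrative.

```python
import math


def two_proportion_z_test(conv_test: int, n_test: int,
                          conv_control: int, n_control: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rates.

    Returns (z statistic, p-value), using the pooled proportion under the
    null hypothesis that AI exposure has no effect.
    """
    p_test = conv_test / n_test
    p_control = conv_control / n_control
    pooled = (conv_test + conv_control) / (n_test + n_control)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_test + 1 / n_control))
    z = (p_test - p_control) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 260 conversions from 10,000 exposed users versus 200 from 10,000 holdout users gives z of roughly 2.83 and a two-sided p-value below 0.01.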

A single test, however, is not enough. Anomalies, market shifts or hidden variables can distort results. For reliability, repeat experiments two or three times, ideally under different conditions or timeframes. Consistency across tests gives you confidence that AI is driving the gains, not luck or coincidence.
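When the same test is repeated, the runs can be summarized together rather than eyeballed. The sketch below uses inverse-variance weighting (a fixed-effect pooled estimate, one of several ways to combine results) and flags whether every run moved in the same direction. It assumes each run reports a lift estimate and its standard error; `pool_repeated_tests` is a hypothetical name.

```python
import math


def pool_repeated_tests(lifts: list[float], std_errors: list[float]) -> dict:
    """Combine lift estimates from repeated experiments (fixed-effect pooling)."""
    if not lifts or len(lifts) != len(std_errors):
        raise ValueError("need one standard error per lift estimate")
    # More precise runs (smaller standard error) get more weight.
    weights = [1 / se ** 2 for se in std_errors]
    pooled = sum(w * x for w, x in zip(weights, lifts)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return {
        "pooled_lift": pooled,
        "pooled_se": pooled_se,
        # 95% confidence interval under a normal approximation.
        "ci95": (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se),
        # True only if every run moved in the same (nonzero) direction.
        "consistent_direction": all(x > 0 for x in lifts) or all(x < 0 for x in lifts),
    }
```

If runs disagree in direction, or the pooled interval spans zero, treat that as a sign that conditions rather than AI may be driving the result. A fixed-effect pool also assumes the true effect is the same in every run; when conditions differ deliberately, a random-effects model is the more cautious choice.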

Layer on lift studies, geo-based experiments or causal machine learning models as needed. Each round of validation sharpens your ability to prove not just that AI worked once, but that it can keep working under real-world conditions.
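For geo-based experiments, a simple and widely used estimator is difference-in-differences: compare the change in test regions with the change in control regions over the same period, so shared seasonality and market shifts cancel out. This sketch assumes the two sets of regions would have trended in parallel without AI (the key assumption of the method) and takes hypothetical per-region (pre, post) totals.

```python
from statistics import mean


def diff_in_diff(test_regions: dict[str, tuple[float, float]],
                 control_regions: dict[str, tuple[float, float]]) -> float:
    """Difference-in-differences estimate of the AI effect per region.

    Each value is (pre_period_total, post_period_total) for one region.
    """
    test_change = mean(post - pre for pre, post in test_regions.values())
    control_change = mean(post - pre for pre, post in control_regions.values())
    # Whatever changed in control regions is assumed to have happened in
    # test regions anyway; the remainder is attributed to the AI treatment.
    return test_change - control_change
```

Test regions that each grew by 30 while control regions each grew by 10 yield an estimated AI effect of 20 per region.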

Verify before you scale

The discipline in modern marketing is shifting from “we tried AI” to “we proved AI works here, for this purpose.” Once impact is measured and validated, whether through repeated lift studies, incrementality tests or KPI shifts, marketers can scale AI with confidence, knowing where, why and how it makes a difference.

Teams that bring this level of discipline will separate true improvement from hype, building the proof needed to secure more investment and optimize the marketing stack for long-term outcomes.

Update attribution and build continuous learning

As AI takes on a bigger role in everything from creative selection to offer sequencing, attribution models must evolve. Every AI-generated or AI-optimized decision should be clearly tracked. Feed the results of experiments, lift tests and KPI reviews back into attribution systems so that future campaigns reflect what has already been proven.
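One common way to feed experiment results back into attribution is to calibrate the model with an incrementality factor: the conversions an experiment showed were truly incremental, divided by the conversions the attribution model credited to the same tactic over the same period. The sketch below assumes that approach; the function names and tactic keys are hypothetical, and a factor should only be applied to tactics that were actually tested.

```python
def calibration_factor(incremental_conversions: float,
                       attributed_conversions: float) -> float:
    """Experiment-measured incremental conversions divided by the conversions
    the attribution model credited to the same tactic over the same period."""
    if attributed_conversions <= 0:
        raise ValueError("attributed_conversions must be positive")
    return incremental_conversions / attributed_conversions


def calibrate(attributed_by_tactic: dict[str, float],
              factors: dict[str, float]) -> dict[str, float]:
    """Scale attributed conversions by each tactic's validated factor.

    Tactics without a validated factor pass through unchanged (factor 1.0).
    """
    return {tactic: value * factors.get(tactic, 1.0)
            for tactic, value in attributed_by_tactic.items()}
```

If an incrementality test found 600 incremental conversions where attribution credited 1,000 to AI bidding, the factor is 0.6, and 2,000 future attributed conversions would be reported as 1,200.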

Maintain a detailed audit trail that links model versions, prompts, datasets and configuration changes to campaign results. Capture decision logs where possible. This lets you reproduce results, run counterfactual analyses when performance shifts and hold systems accountable while meeting privacy and governance requirements.
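An audit trail can be as simple as an append-only log with one structured record per AI decision. The sketch below emits JSON Lines; the `AIDecisionRecord` fields are assumptions chosen to mirror the paragraph above (model version, prompt, dataset, configuration), and storing a prompt ID rather than raw prompt text is one way to keep personal data out of the log.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AIDecisionRecord:
    """One audit-trail entry tying an AI decision to what produced it (hypothetical schema)."""
    campaign_id: str
    model_version: str
    prompt_id: str        # reference to a stored, versioned prompt, not the raw text
    dataset_version: str
    config: dict          # settings in effect for this decision
    decision: str         # e.g. which creative variant or bid was chosen
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_log_line(record: AIDecisionRecord) -> str:
    """Serialize the record as a single JSON line (sorted keys for stable diffs)."""
    return json.dumps(asdict(record), sort_keys=True) + "\n"
```

Each line can be appended to a file opened in append mode, for example `f.write(to_log_line(record))`, and later joined to campaign results by `campaign_id`.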

Don’t just use AI: prove it delivers

With AI now deeply embedded in marketing workflows and customer experiences, measuring its performance is non-negotiable. Treat it like any other performance lever. Define clear outcomes, run structured tests and demand repeatable proof before you scale.

Keep a living record of what you tested, how you controlled for external variables and what moved because of AI. Fold those learnings into attribution so AI’s impact is visible, not hidden. Use each cycle of testing and refinement to establish where AI belongs in creative, media and lifecycle programs.

When leadership asks what AI is delivering, you should be able to point to causal lift, not hopeful correlations. If AI is working, prove it. If not, optimize until it does. That is how AI becomes a credible driver of marketing performance.

Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the martech community. Our contributors work under the oversight of the editorial staff, and contributions are checked for quality and relevance to our readers. MarTech is owned by Semrush. Contributor was not asked to make any direct or indirect mentions of Semrush. The opinions they express are their own.

Original coverage: martech.org
